

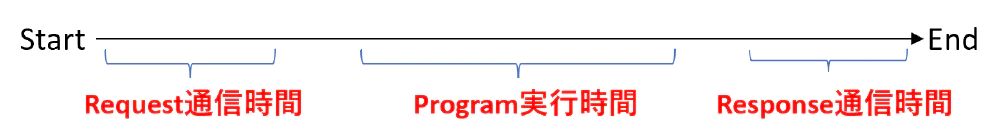

Response time, the time between a request and its response, should be as short as possible. Short response times make for a better user experience, and many cloud services nowadays charge by the time it takes to handle a request (GCP's Cloud Run, for example). In a microservice design, where one request may trigger several internal API calls, response time matters even more.

In this article, I will show how much response time varies with the programming language and the communication protocol. There have surely been plenty of articles like this already, but I'm going to add one more to the pile. Please note that the code below is not optimized for maximum speed. It is written the way you would typically find by searching Google.

Here is a very simple calculation. Say you have a service with 10,000 users, and each user makes 1,000 calls per year. If we can cut the Web API's response time by 100 ms (milliseconds), we save about 280 hours in total.
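The 280-hour figure is simple arithmetic. Here it is spelled out with the example numbers above, working in whole milliseconds to keep the values exact:

```python
users = 10_000           # users of the service
calls_per_user = 1_000   # API calls per user per year
saved_ms_per_call = 100  # response time saved on each call

total_saved_ms = users * calls_per_user * saved_ms_per_call
total_saved_s = total_saved_ms / 1_000
total_saved_h = total_saved_s / 3_600

print(f"{total_saved_s:,.0f} s = {total_saved_h:.1f} hours")  # 1,000,000 s = 277.8 hours
```

277.8 hours rounds to the "about 280 hours" used in the text.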

Conversely, by writing very slow code or choosing a slow communication method, you may be taking 280 hours away from everyone else. On top of that, the servers spend unnecessary processing time during those hours, which increases costs. Of course, response time also varies greatly with the user's network conditions. This time, though, we will focus only on the programming language and the communication protocol. The API runs on GCP's Cloud Run (1 CPU, 512 MB of memory).

First of all, we need to decide what the server-side process does. Preparing a database is a pain in the ass, so we'll do without one this time. The process isn't particularly meaningful, but we'll assume an API that reads a file, parses JSON, and computes the hash of a string. The execution time is measured in each language and reported as the average of three runs. Naturally, all measurements are made in the same environment on Cloud Run.

The Node.js version is 14.16.1. The code below shows only the measured part.

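```javascript
const crypto = require('crypto');
const fs = require('fs');

// Values normally taken from the request (placeholders for this measurement)
const input_id = 9999;
const input_password = 'thisispassword';

// Read and parse the 10,000-user file
const users = JSON.parse(fs.readFileSync('user.json', 'utf8'));

// Linear search by user ID (== so that a string ID from the URL also matches)
const user = users.find(u => u.id == input_id);

// SHA-512 hash of the submitted password, hex-encoded
const hash = crypto.createHash('sha512').update(input_password).digest('hex');
```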

In short, user.json contains data for 10,000 users, which the code reads and parses. It then looks up the user with the requested ID and hashes the submitted password string with SHA-512. Normally more processing would follow, but we measure only up to this point.

Isn't this an ordinary process? Yes, and that's the point: we want to measure something an API would normally do.
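The article doesn't show user.json itself. The C# and Rust `User` types later on suggest that each entry has an integer `id` and a string `hash`. The sketch below generates a comparable 10,000-user file under that assumption. The `make_users` helper and the hash contents (SHA-512 of a made-up per-user password) are hypothetical.

```python
import hashlib
import json


def make_users(count: int = 10_000) -> list:
    """Build `count` hypothetical user records with an integer id and a hex hash."""
    users = []
    for i in range(1, count + 1):
        # Made-up password per user, hashed the same way the API hashes its input
        digest = hashlib.sha512(f"password{i}".encode("utf-8")).hexdigest()
        users.append({"id": i, "hash": digest})
    return users


if __name__ == "__main__":
    # Writes user.json to the current directory
    with open("user.json", "w", encoding="utf-8") as f:
        json.dump(make_users(), f)
```

IDs run from 1 to 10,000, so the ID 9999 used in the requests below exists, and every ID fits in the `u16` used by the Rust version.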

The PHP version is 7.4.3.

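```php
<?php
// Values normally taken from the request (placeholders for this measurement)
$input_id = 9999;
$input_password = "thisispassword";

// Read and decode the 10,000-user file into associative arrays
$json = file_get_contents("user.json");
$users = json_decode($json, true);

// Index of the user whose 'id' matches (array_search returns false if none does)
$index = array_search($input_id, array_column($users, 'id'));
$user = $users[$index];

// SHA-512 of the password, hex-encoded (mhash needs the mhash extension;
// hash('sha512', $input_password) returns the same string)
$hash = bin2hex(mhash(MHASH_SHA512, $input_password));
```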

I'd heard that PHP has become very fast lately, and sure enough it is very fast here: 7.45 ms. PHP frameworks have also become very good, and Laravel in particular is excellent.

The Python version is 3.8.

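```python
import json
import hashlib

# Values normally taken from the request (placeholders for this measurement)
input_id = 9999
input_password = "thisispassword"

# Read and parse the 10,000-user file
with open('user.json') as f:
    users = json.load(f)

# First user whose "id" matches, or None if there is none
user = next(filter(lambda u: u["id"] == input_id, users), None)

# SHA-512 of the password, hex-encoded
hash_str = hashlib.sha512(str(input_password).encode("utf-8")).hexdigest()
```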

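Python is about as fast as PHP. Popular languages tend to be fast at this kind of work because the functions in their standard modules are well optimized. In CPython, for instance, the heavy lifting in json and hashlib is done in C.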

In Python's case, I think the readability of the code matters even more than its processing speed, and this code is simple and easy to understand.
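The article reports each language's time as the average of three runs, but it doesn't show how the timing was taken. Here is one way to do it in Python. It assumes in-process timing with `time.perf_counter`, so interpreter start-up is not included; the article doesn't say whether its numbers include it. The `handle` and `average_ms` helpers and the generated test data are hypothetical.

```python
import hashlib
import json
import os
import statistics
import tempfile
import time


def handle(path: str, input_id: int, input_password: str) -> tuple:
    """The measured process: read and parse the file, find the user, hash the password."""
    with open(path, encoding="utf-8") as f:
        users = json.load(f)
    user = next(filter(lambda u: u["id"] == input_id, users), None)
    hash_str = hashlib.sha512(input_password.encode("utf-8")).hexdigest()
    return user, hash_str


def average_ms(fn, runs: int = 3) -> float:
    """Call fn() `runs` times and return the mean wall-clock time in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.mean(samples)


if __name__ == "__main__":
    # Hypothetical test data: 10,000 users with an integer id and a hex hash
    users = [{"id": i, "hash": hashlib.sha512(str(i).encode("utf-8")).hexdigest()}
             for i in range(1, 10_001)]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "user.json")
        with open(path, "w", encoding="utf-8") as f:
            json.dump(users, f)
        ms = average_ms(lambda: handle(path, 9999, "thisispassword"))
        print(f"average of 3 runs: {ms:.2f} ms")
```

The mean of only three samples is sensitive to a single slow run. Reporting the median or the min/max alongside the mean is a cheap way to show how stable the numbers are.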

Measured with a Release build on .NET 6.

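```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Security.Cryptography;
using System.Text.Json;

// Values normally taken from the request (placeholders for this measurement)
var input_id = 9999;
var input_password = "thisispassword";

using (var sr = new StreamReader("user.json"))
{
    // Read and deserialize the 10,000-user file
    var jsonData = sr.ReadToEnd();
    var users = JsonSerializer.Deserialize<IEnumerable<User>>(jsonData);

    // First user whose id matches (throws if there is none)
    var user = users.First(x => x.id == input_id);

    // SHA-512 of the password, then hex-encode it byte by byte
    using var sha512 = SHA512.Create();
    byte[] result = sha512.ComputeHash(System.Text.Encoding.UTF8.GetBytes(input_password));
    var hash = "";
    for (var i = 0; i < result.Length; i++)
    {
        hash += String.Format("{0:x2}", result[i]);
    }
}

// Type declarations must follow top-level statements
class User
{
    public int id { get; set; }
    public string hash { get; set; }
}
```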

C# is too fucking slow: 54 ms, roughly 7 times slower than PHP or Python. When I checked which step was taking so long, I found that deserializing the JSON was unusually slow.

I also tried Newtonsoft.Json, which many people probably use, and it was about three times slower still. I'm really annoyed, because I actually rather like C#. Why is it so slow?
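Finding the slow step, as was done for C# here, comes down to timing each stage on its own. The sketch below illustrates that idea in Python with a hypothetical `profile_stages` helper and made-up data. It shows the technique only and says nothing about where C#'s 54 ms went. File reading is left out so the example stays self-contained.

```python
import hashlib
import json
import time


def profile_stages(raw_json: str, input_id: int, input_password: str) -> dict:
    """Time each stage of the process separately; returns milliseconds per stage."""
    t0 = time.perf_counter()
    users = json.loads(raw_json)                                  # deserialize
    t1 = time.perf_counter()
    next((u for u in users if u["id"] == input_id), None)         # linear lookup
    t2 = time.perf_counter()
    hashlib.sha512(input_password.encode("utf-8")).hexdigest()    # hash
    t3 = time.perf_counter()
    return {
        "parse": (t1 - t0) * 1000,
        "lookup": (t2 - t1) * 1000,
        "hash": (t3 - t2) * 1000,
    }


if __name__ == "__main__":
    # Hypothetical 10,000-user payload, serialized once outside the timed region
    raw = json.dumps([{"id": i, "hash": "0" * 128} for i in range(1, 10_001)])
    stages = profile_stages(raw, 9999, "thisispassword")
    for name, ms in sorted(stages.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:>6}: {ms:.3f} ms")
```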

Finally, there's Rust, the language of the ambitious, and it is the fastest.

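```rust
// Cargo dependencies: serde (with the "derive" feature), serde_json, rust-crypto
use crypto::digest::Digest;
use crypto::sha2::Sha512;
use serde::Deserialize;
use std::fs;

#[allow(dead_code)]
#[derive(Deserialize)]
struct User {
    id: u16,
    hash: String,
}

fn main() {
    // Values normally taken from the request (placeholders for this measurement)
    let input_id: u16 = 9999;
    let input_password = "thisispassword";

    // Read and deserialize the 10,000-user file
    let content = fs::read_to_string("user.json").unwrap();
    let users: Vec<User> = serde_json::from_str(&content).unwrap();

    // Position of the first user whose id matches (panics if there is none)
    let index = users.iter().position(|x| x.id == input_id).unwrap();
    let _user = &users[index];

    // SHA-512 of the password, hex-encoded
    let mut hasher = Sha512::new();
    hasher.input_str(input_password);
    let hash = hasher.result_str();
}
```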

Rust is the fastest, and by a lot: 3 ms. The only drawback is that the code looks a bit verbose compared to Python and the others. I didn't test it this time, but I assume C++ would be about as fast. That said, for a process like this, I think PHP or Python would be perfectly fine.

The averages of three runs were as follows.

| Node.js | PHP | Python | C# | Rust |
|---|---|---|---|---|
| 20 ms | 7 ms | 8 ms | 54 ms | 3 ms |

Only C# is unusually slow, isn't it? I double-checked the code and the execution environment many times, and I'm fairly sure this result is right. For this process, Rust is almost 20 times faster than C# (3 ms vs 54 ms). JSON serialization and deserialization happen very frequently in modern applications, so in a C# environment they may be costing you a lot of processing time.
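The ratios behind "almost 20 times" can be read straight off the table. The inputs are the table's rounded averages (PHP's 7.45 ms shows up as 7), so the ratios are only rough:

```python
# Rounded averages (ms) restated from the table above
times_ms = {"Node.js": 20, "PHP": 7, "Python": 8, "C#": 54, "Rust": 3}

fastest = min(times_ms, key=times_ms.get)
slowest = max(times_ms, key=times_ms.get)
spread_ms = times_ms[slowest] - times_ms[fastest]
ratio = times_ms[slowest] / times_ms[fastest]

print(f"{slowest} vs {fastest}: {spread_ms} ms apart, {ratio:.0f}x")  # C# vs Rust: 51 ms apart, 18x
for lang, ms in sorted(times_ms.items(), key=lambda kv: kv[1]):
    print(f"{lang:<8}{ms:>3} ms  ({ms / times_ms[fastest]:.1f}x Rust)")
```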

As expected, Rust had the fastest processing, but I don't think the language makes that big a difference to the overall response time.

Next, let's look at the impact of the communication protocol. The API processing is the same code as above, written in Rust (the fastest language), running on the same Cloud Run server (Tokyo region).

To implement the REST API in Rust, I used actix-web.

```rust
// Cargo dependencies: actix-web 4, serde (with the "derive" feature)
use actix_web::{put, web, App, HttpResponse, HttpServer, Responder};
use serde::{Deserialize, Serialize};

#[derive(Debug, Serialize, Deserialize)]
struct RequestBody {
    password: String,
}

#[derive(Debug, Serialize, Deserialize)]
struct ResponseBody {
    hash: String,
}

// PUT /{input_id} with a JSON body such as {"password": "..."}
#[put("/{input_id}")]
async fn index(path: web::Path<u16>, body: web::Json<RequestBody>) -> impl Responder {
    let input_id = path.into_inner();
    let password = &body.password;

    // The above process (omitted here); it produces `hash: String`

    HttpResponse::Ok().json(ResponseBody { hash })
}

#[actix_web::main]
async fn main() -> std::io::Result<()> {
    HttpServer::new(|| App::new().service(index))
        .bind("0.0.0.0:8080")?
        .run()
        .await
}
```

Then deploy it to Cloud Run and measure the REST API's response time. This is an ordinary PUT request, with the ID in the URL path and the password in the JSON body.

```http
### RestAPI Response Time Measurement Test
PUT {{endpoint}}/9999 HTTP/1.1
content-type: application/json

{
    "password": "thisispassword"
}
```
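To see what this request involves end to end, here is a minimal local stand-in for the `PUT /{id}` endpoint, built only from Python's standard library, plus a client that times one round trip. This is a hypothetical sketch, not the Actix service. The handler only hashes the password and skips the user lookup. Because it listens on localhost, the timing leaves out the real network latency to Cloud Run. `HashHandler` and `timed_put` are made-up names.

```python
import hashlib
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HashHandler(BaseHTTPRequestHandler):
    """Hypothetical local stand-in for PUT /{input_id} with body {"password": ...}."""

    def do_PUT(self):
        input_id = int(self.path.strip("/"))  # ID from the URL path (unused: no lookup here)
        length = int(self.headers["Content-Length"])
        body = json.loads(self.rfile.read(length))
        digest = hashlib.sha512(body["password"].encode("utf-8")).hexdigest()
        payload = json.dumps({"hash": digest}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def timed_put(base_url: str, input_id: int, password: str) -> tuple:
    """PUT {base_url}/{input_id} with a JSON body; return (parsed JSON, elapsed ms)."""
    data = json.dumps({"password": password}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/{input_id}",
        data=data,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
    # An empty ProxyHandler ignores proxy environment variables, so localhost stays local
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    start = time.perf_counter()
    with opener.open(req) as resp:
        result = json.loads(resp.read())
    return result, (time.perf_counter() - start) * 1000


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 0), HashHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    result, ms = timed_put(base_url, 9999, "thisispassword")
    print(f"hash={result['hash'][:16]}...  elapsed={ms:.2f} ms")
    server.shutdown()
    server.server_close()
```

Each call to `timed_put` opens a fresh connection, so the elapsed time includes TCP connection setup as well as the request itself.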

Next, let's try gRPC, which runs over HTTP/2. gRPC is a bit complicated in Rust, but I implemented it with tonic. First, define the .proto file.

```protobuf
syntax = "proto3";

package auth;

service Auth {
  rpc Test (RequestBody) returns (ResponseBody) {}
}

message RequestBody {
  int64 id = 1;
  string password = 2;
}

message ResponseBody {
  string hash = 1;
}
```
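This .proto file fixes how a request is laid out on the wire. As an illustration of the protobuf binary format, here is a hand-written encoder for `RequestBody`, compared with the same fields as compact JSON. It is not tonic's code, which is generated for you. The helper names are hypothetical. Payload size is only one of several differences between the two protocols.

```python
import json


def encode_varint(n: int) -> bytes:
    """Protobuf base-128 varint; negative int64 values use 64-bit two's complement."""
    n &= (1 << 64) - 1
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_request_body(id_: int, password: str) -> bytes:
    """Hand-encode auth.RequestBody {int64 id = 1; string password = 2;}."""
    out = bytearray()
    if id_ != 0:                              # proto3 omits fields holding default values
        out += encode_varint((1 << 3) | 0)    # field 1, wire type 0 (varint)
        out += encode_varint(id_)
    pw = password.encode("utf-8")
    if pw:
        out += encode_varint((2 << 3) | 2)    # field 2, wire type 2 (length-delimited)
        out += encode_varint(len(pw))
        out += pw
    return bytes(out)


if __name__ == "__main__":
    pb = encode_request_body(9999, "thisispassword")
    js = json.dumps({"id": 9999, "password": "thisispassword"}, separators=(",", ":")).encode()
    print(pb.hex(" "))
    print(f"protobuf: {len(pb)} bytes, compact JSON: {len(js)} bytes")
```

Running it prints `08 8f 4e 12 0e 74 68 69 73 69 73 70 61 73 73 77 6f 72 64` and reports 19 bytes for protobuf against 39 for compact JSON. The difference comes from field numbers replacing field names on the wire.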

Then create build.rs and register the .proto file as a build target.

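```rust
// build.rs: generate Rust code for protos/auth.proto at build time
// (the main crate also needs serde as a dependency for the derives below)
fn main() -> Result<(), Box<dyn std::error::Error>> {
    tonic_build::configure()
        // Derive serde traits on every generated message ("." = all packages);
        // full paths, because serde is not imported inside the generated module
        .type_attribute(".", "#[derive(serde::Serialize, serde::Deserialize)]")
        .type_attribute(".", "#[serde(default)]")
        // Protos to compile, then include directories
        // (newer tonic-build releases call this method compile_protos)
        .compile(&["protos/auth.proto"], &["protos"])?;
    Ok(())
}
```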

Finally, implement the server-side processing with tonic. (It's long.)

```rust
use auth::auth_server::{Auth, AuthServer};
use auth::*;
use tonic::{transport::Server, Request, Response, Status};

// Code generated from protos/auth.proto by build.rs
pub mod auth {
    tonic::include_proto!("auth");
}

#[derive(Debug, Default)]
pub struct AuthApi {}

#[tonic::async_trait]
impl Auth for AuthApi {
    async fn test(&self, request: Request<RequestBody>) -> Result<Response<ResponseBody>, Status> {
        // into_inner() consumes the request, so unpack it only once
        let req = request.into_inner();
        let id = req.id;
        let password = req.password;

        // The above process (omitted here); it produces `hash: String`

        Ok(Response::new(ResponseBody { hash }))
    }
}

#[tokio::main]
async fn main() -> Result<(), Box<dyn std::error::Error>> {
    let addr = "0.0.0.0:8080".parse()?;
    let auth_api = AuthApi::default();

    Server::builder()
        .add_service(AuthServer::new(auth_api))
        .serve(addr)
        .await?;

    Ok(())
}
```

All that's left is to create a gRPC client and call the service. I wrote the client in Rust as well and measured the time.

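```rust
use std::time::Instant;
use tonic::transport::{Channel, ClientTlsConfig}; // requires tonic's TLS feature

use auth::auth_client::AuthClient;
use auth::*;

pub mod auth {
    tonic::include_proto!("auth");
}

type BoxError = Box<dyn std::error::Error + Send + Sync + 'static>;

// DOMAIN and CLOUDRUN_URL: the Cloud Run service's host name and URL (defined elsewhere)

// Open a TLS channel to the Cloud Run service
async fn get_grpc_channel() -> Result<Channel, BoxError> {
    let tls = ClientTlsConfig::new().domain_name(DOMAIN);
    let channel = Channel::from_static(CLOUDRUN_URL)
        .tls_config(tls)?
        .connect()
        .await?;
    Ok(channel)
}

pub async fn get_client() -> Result<AuthClient<Channel>, BoxError> {
    let channel = get_grpc_channel().await?;
    Ok(AuthClient::new(channel))
}

#[tokio::main]
async fn main() -> Result<(), BoxError> {
    // Measure the time for this: connecting (including TLS) plus one call
    let start = Instant::now();

    let request = RequestBody {
        id: 9999,
        password: "thisispassword".to_string(),
    };
    let mut client = get_client().await?;
    let response = client.test(request).await?.into_inner();

    println!("hash = {}, elapsed = {:?}", response.hash, start.elapsed());
    Ok(())
}
```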

The averages of three runs were as follows. Naturally, all three runs were made after the Cloud Run container was already up, and no server-side cache was used.

| REST API (Actix) | gRPC (tonic) |
|---|---|
| 182 ms | 102 ms |

gRPC is really fast! This is probably partly because gRPC is optimized for HTTP/2. I suspect the bigger reason, though, is the difference in client-side processing and in how the server handles and waits on requests. Actix Web is known as a fast REST API framework, so a .NET Web API in C# would likely be even slower.

In the environment I tested, processing time differed by up to 51 ms depending on the programming language (54 ms for C# vs 3 ms for Rust). Response time differed by 80 ms depending on the communication protocol (182 ms vs 102 ms). Of course, these numbers will vary with the environment and the processing. There may well be workloads where .NET in C# is the fastest, and others where Python and PHP are very slow. Also, as you can see from the code, Rust is honestly hard to read because the code ends up so verbose.

And in the end, I think a few dozen milliseconds don't really matter. Having written all this, I admit that 80 ms is probably too short for a person to notice. Likewise, 280 hours lost across 10,000 people over a year doesn't seem like an important metric after all (no, I don't have a split personality). We all waste far more time than that in our daily lives.

Any programming language can deliver acceptable response times, even with a REST API over HTTP/1.1. If a lot of effort would take 300 ms down to 1 ms, I'd gladly put in the work. But there is no need to switch languages or go to the trouble of moving to gRPC just to get from 300 ms to 250 ms. No one cares about that.

However, if the code is so poorly written that something that should take 300 ms takes 30,000 ms, that is of course unacceptable.
